| Concept | Description / Commands |

|---|---|

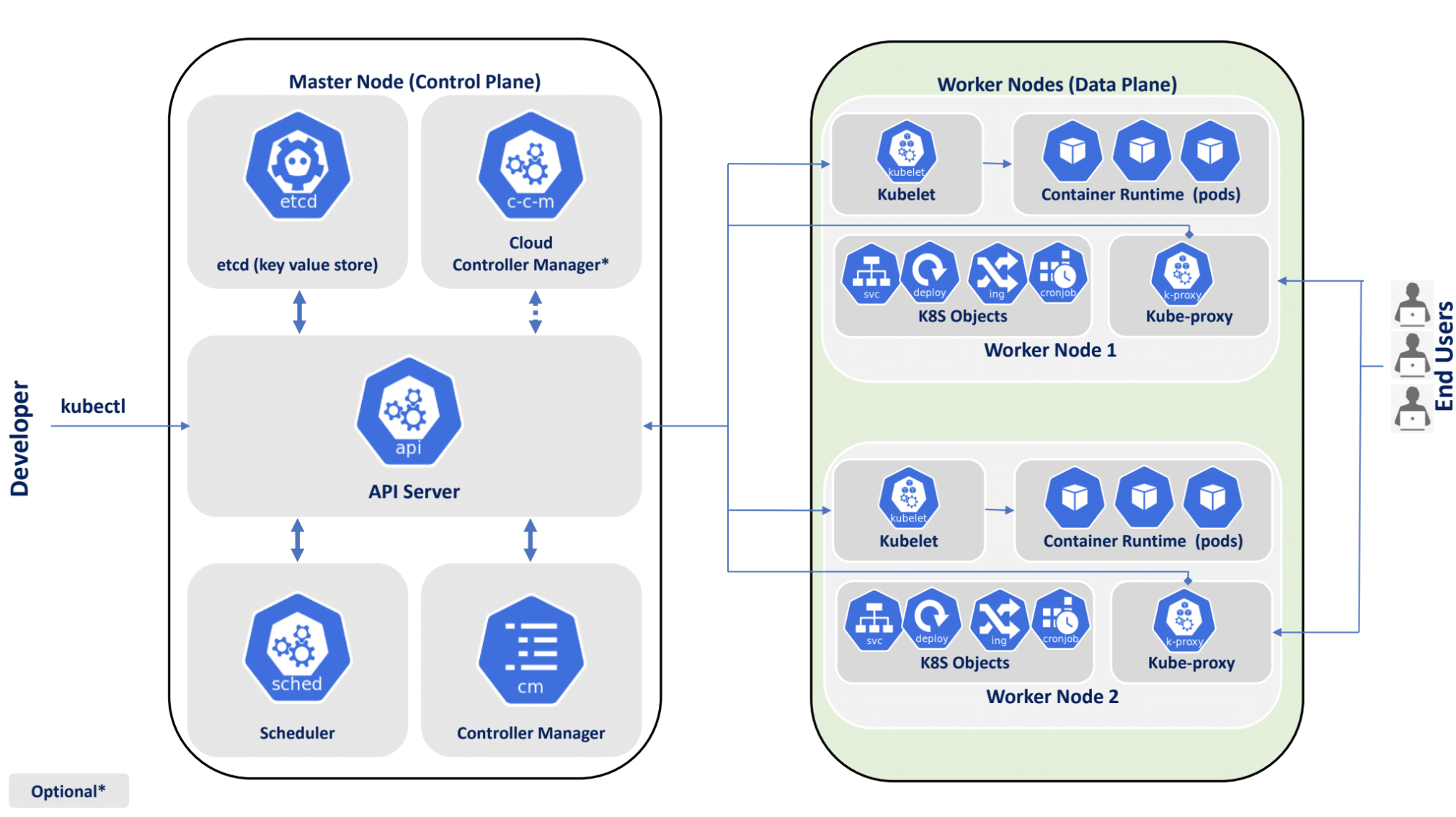

| Kubernetes Cluster | A collection of machines (nodes) that run containerized apps. Includes a Control Plane (manages cluster) and Worker Nodes (run workloads). |

| Control Plane | Comprises API Server, etcd, Scheduler, and Controller Manager. Oversees and coordinates the cluster. |

| etcd | Consistent and highly available key-value store used for cluster state data (e.g., what Pods exist). |

| API Server | Front-end to the Kubernetes control plane. All operations must go through it (kubectl, Kube UI, etc.). |

| Scheduler | Assigns new Pods to nodes based on resource requirements and constraints. |

| Controller Manager | Runs controllers that handle routine tasks (e.g. node controller, replication controller). |

| Worker Node | Executes your containers via Pods. Runs essential components like kubelet, kube-proxy, and the container runtime. |

| Kubelet | Primary node agent. Watches for work from the API Server and manages containers (e.g., start/stop Pods). |

| Kube-Proxy | Network proxy that runs on each node, maintains network rules for Pod-to-Pod communication across nodes. |

| Container Runtime | Software responsible for running containers (e.g., Docker, containerd, CRI-O). |

| Namespace | Logical cluster partition for separating resources. Default namespaces include 'default', 'kube-system', etc. |

| Pod | Smallest deployable unit in Kubernetes. Encapsulates container(s), storage resources, IP, and options. |

| ReplicaSet | Ensures a specified number of identical Pods are running. Often managed by a Deployment. |

| Deployment | Higher-level abstraction that manages ReplicaSets and Pod templates, enabling rolling updates and rollbacks. |

| DaemonSet | Ensures a copy of a Pod is running on all (or some) nodes. Good for logging or monitoring agents. |

| StatefulSet | Manages stateful applications, ensuring stable identities and storage for each Pod (e.g., databases). |

| Service | Stable network endpoint that exposes a set of Pods (e.g., ClusterIP, NodePort, LoadBalancer). Balances traffic across Pods. |

| Ingress | Manages external access to services. Typically requires an Ingress Controller (like NGINX) to route traffic. |

| PersistentVolume (PV) | A piece of storage in the cluster that has been provisioned by an admin or dynamically. |

| PersistentVolumeClaim (PVC) | A request for storage by a user. Binds to an available PersistentVolume. |

| ConfigMap | Stores non-confidential config data as key-value pairs. Mounted as files or environment variables in Pods. |

| Secret | Stores sensitive data (e.g., passwords, tokens). Similar to ConfigMap but is base64-encoded and more secure. |

| Job | Creates one or more Pods to perform a task until successful completion (batch-like tasks). |

| CronJob | Schedules Jobs at specific times or intervals. Uses standard UNIX cron format. |

| Volume | Abstracts storage for a Pod. Multiple types (emptyDir, hostPath, NFS, etc.). Lifecycle tied to the Pod (unless external like NFS). |

| Basic Commands |

- kubectl get pods/nodes/services/etc. - kubectl describe pod/<podname> - kubectl logs <podname> - kubectl exec -it <podname> -- /bin/bash - kubectl apply -f <filename.yaml> - kubectl delete -f <filename.yaml> |

| Common YAML Fields | apiVersion, kind, metadata, spec. Refer to official docs for each resource’s schema. |

| Rolling Update / Rollback |

Achieved via Deployments. Example: kubectl rollout status deployment/<dep-name> kubectl rollout undo deployment/<dep-name> |

| Tips |

- Use labels and selectors effectively. - Resource requests/limits help with scheduling. - Namespaces isolate resources. - Monitor logs and metrics for cluster health. |
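
The tips on labels and resource requests/limits can be sketched in a single manifest. The following is a minimal, illustrative Pod; the name `demo-pod`, the label `app: demo`, the image tag, and the CPU/memory figures are all assumptions chosen for the example, not recommendations.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: demo-pod            # hypothetical name
  namespace: default        # one of the built-in namespaces
  labels:
    app: demo               # hypothetical label used by selectors
spec:
  containers:
    - name: web
      image: nginx:1.27     # assumed tag; pin whichever version you actually use
      resources:
        requests:           # what the Scheduler reserves when placing the Pod
          cpu: 100m
          memory: 128Mi
        limits:             # upper bound enforced at runtime
          cpu: 500m
          memory: 256Mi
```

With the label in place, a selector such as `kubectl get pods -l app=demo` would list only the matching Pods.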

Below is a simple, plug-and-play set of four YAML files (a Deployment, a Service, a ConfigMap, and an Ingress) that you can use to deploy a basic application on Kubernetes. Think of these files as recipes that tell Kubernetes how to cook up your app.

This file, `deployment.yaml`, tells Kubernetes to create and manage a set of “pods” (small boxes running your app).

```yaml
apiVersion: apps/v1  # API version of the Deployment object
kind: Deployment  # We are creating a Deployment
metadata:
  name: my-app-deployment  # The name of this deployment
  labels:
    app: my-app  # Labels help group and identify your app's resources
spec:
  replicas: 3  # Number of copies (pods) to run
  selector:
    matchLabels:
      app: my-app  # This tells the deployment which pods to manage (by matching labels)
  template:
    metadata:
      labels:
        app: my-app  # Pods get this label so they can be found by the Service later
    spec:
      containers:
        - name: my-app-container  # Name for the container (like a nickname)
          image: nginx:latest  # The container image to use (replace with your own if needed)
          ports:
            - containerPort: 80  # The port the container listens on
          envFrom:
            - configMapRef:  # Load environment variables from a ConfigMap
                name: my-app-config  # This ConfigMap is defined in another file (see below)
```
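
The table above notes that Deployments enable rolling updates. As a hedged sketch, the pace of a rollout can be tuned through the Deployment's `strategy` field. The fragment below is not a complete manifest; it is meant to be merged under the top-level `spec:` of the Deployment, and the two values are assumptions chosen to illustrate a cautious rollout (when the field is omitted, Kubernetes defaults both to 25%).

```yaml
# Fragment: merge under the top-level `spec:` of the Deployment
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1         # assumed value: at most 1 extra Pod above `replicas` during an update
      maxUnavailable: 0   # assumed value: never drop below the desired replica count
```

With three replicas, these settings would replace Pods one at a time, starting each new Pod before an old one is removed.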

This file, `service.yaml`, creates a Service, which is like a phone line that connects users to your app. It routes traffic to the pods created by the Deployment, finding them by their `app: my-app` label.

```yaml
apiVersion: v1  # API version for the Service object
kind: Service  # We are creating a Service
metadata:
  name: my-app-service  # Name of the service
spec:
  selector:
    app: my-app  # This Service finds pods with the label "app: my-app"
  ports:
    - protocol: TCP
      port: 80  # Port that the service exposes
      targetPort: 80  # Port on the pods where traffic should go
  type: ClusterIP  # Service type (ClusterIP means it's only available inside the cluster)
```
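
For comparison with ClusterIP, here is a minimal sketch of the NodePort type mentioned in the table, which additionally opens a port on every node. The Service name `my-app-nodeport` and the port `30080` are assumptions for illustration; the node port must fall inside the cluster's configured NodePort range (30000-32767 by default).

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app-nodeport   # hypothetical name, distinct from my-app-service
spec:
  type: NodePort          # reachable at <any-node-IP>:<nodePort> as well as inside the cluster
  selector:
    app: my-app           # same Pod label as the Deployment above
  ports:
    - protocol: TCP
      port: 80            # port exposed inside the cluster
      targetPort: 80      # port on the Pods
      nodePort: 30080     # assumed value; omit to let Kubernetes pick a free port
```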

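This file, `configmap.yaml`, creates a ConfigMap to store configuration data (like environment variables) for your app. It keeps configuration separate from your code, and the Deployment loads every key in it as an environment variable through `envFrom`.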

```yaml
apiVersion: v1  # API version for ConfigMap
kind: ConfigMap  # We are creating a ConfigMap
metadata:
  name: my-app-config  # Name of the ConfigMap
data:
  EXAMPLE_ENV: "Hello, Kubernetes!"  # An example environment variable
```
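
A ConfigMap is meant for non-confidential values only. For sensitive values, the Secret resource from the table could be used in the same way. The sketch below is illustrative: the name `my-app-secret`, the key `API_TOKEN`, and its placeholder value are assumptions and are not part of the four files in this guide.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: my-app-secret        # hypothetical name
type: Opaque                 # generic key-value Secret
stringData:                  # plain-text input; the API server stores it base64-encoded under `data`
  API_TOKEN: "replace-me"    # placeholder only; never commit real credentials
```

To expose it to the container, a `secretRef` entry naming `my-app-secret` can be added under `envFrom`, next to the existing `configMapRef`.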

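This file, `ingress.yaml`, sets up an Ingress, which acts like a door for your app from the outside world. It routes incoming web traffic (from a domain name) to your Service. An Ingress only takes effect if an Ingress Controller (such as NGINX) is running in the cluster.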

```yaml
apiVersion: networking.k8s.io/v1  # API version for Ingress
kind: Ingress  # We are creating an Ingress
metadata:
  name: my-app-ingress  # Name of the Ingress
spec:
  rules:
    - host: myapp.example.com  # Replace with your own domain name
      http:
        paths:
          - path: /  # URL path to match
            pathType: Prefix  # How the path matching is done
            backend:
              service:
                name: my-app-service  # The Service that will receive the traffic
                port:
                  number: 80  # The port on the Service to use
```
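
The manifest above does not say which Ingress Controller should handle it, so it relies on the cluster having a default IngressClass. If yours does not, the class can be named explicitly. The fragment below is a sketch that assumes a controller registered under the class name `nginx`; check the actual names in your cluster with `kubectl get ingressclass`.

```yaml
# Fragment: merge under the top-level `spec:` of the Ingress
spec:
  ingressClassName: nginx   # assumed class name; must match an IngressClass in your cluster
```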

Open your terminal and run the following commands (assuming you have access to a Kubernetes cluster):

```shell
kubectl apply -f configmap.yaml
kubectl apply -f deployment.yaml
kubectl apply -f service.yaml
kubectl apply -f ingress.yaml
```
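
As an optional alternative to running four separate commands, the files can be grouped with Kustomize, which is built into kubectl. This is a minimal sketch that assumes all four manifests sit in the same directory as a new file named `kustomization.yaml`.

```yaml
# kustomization.yaml (assumed to live next to the four manifests)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - configmap.yaml
  - deployment.yaml
  - service.yaml
  - ingress.yaml
```

Running `kubectl apply -k .` from that directory would then apply all four resources in one step.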